Sep 18, 2025 Sina Schäfer

Foundation models are powerful AI models that have been pre-trained on extensive datasets and can be flexibly applied to a wide range of tasks and application areas. They form the technological foundation of many modern AI systems and enable the development of specialized applications on top of them. But what distinguishes them, how do they work, and what role do they play in practical use? This article provides a concise overview.

Definition: What are Foundation Models?

Foundation models are AI models that have been extensively pre-trained on broad datasets of text, images, or other data types, sometimes several at once (multimodal). Unlike specialized AI models, which are trained for a single, clearly defined task, foundation models learn from a wide variety of data and can flexibly transfer their knowledge to different tasks. As a rule, the larger the model and the more comprehensive the training data, the better it tends to perform across application areas. Foundation models are built on modern architectures such as the transformer, which processes large amounts of data efficiently and captures relationships across different contexts.
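At the core of the transformer is attention. Each element of a sequence is compared with every other element, and these comparisons determine how much each element contributes to the result. The minimal sketch below implements scaled dot-product attention in plain Python to show the idea. It leaves out the learned projection matrices, the multiple attention heads, and the stacked layers of a real transformer. The function names and toy vectors are illustrative only.

```python
import math

def softmax(xs):
    # Subtract the maximum before exponentiating for numerical stability
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each query produces a weighted average
    of all value vectors, weighted by how similar the query is to each key."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, component by component
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy "token" vectors. In self-attention, queries, keys and values all
# come from the same sequence (here used directly, without learned projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(tokens, tokens, tokens))
```

Every output row blends information from all three tokens. Tokens whose vectors resemble the query receive more weight, which is how the mechanism captures relationships across a whole context.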

Typical applications built on foundation models include language assistants, image generators, and AI-based forecasting tools: systems that use learned knowledge to solve new tasks without having to be reprogrammed for each one.

Background and Context

The idea of developing a central model for many different tasks has become one of the most important trends in AI research in recent years. First steps in this direction came with advances in deep learning around 2015. The transformer architecture, introduced in 2017, was a decisive step: it enabled efficient processing of large datasets while capturing contextual relationships more effectively.

On this basis, the first language models emerged that could not only perform individual tasks but could also be adapted for various purposes through fine-tuning, among them BERT (Google AI, 2018). With GPT-3, OpenAI demonstrated the potential of these models beyond research in 2020. The term “foundation model” was coined in 2021 in a research paper from Stanford University that systematically described this new class of models. Other well-known examples include DALL·E 2 (OpenAI, 2022) and GPT-4 (OpenAI, 2023).

How do Foundation Models Work?

Foundation models use large amounts of training data to identify statistical regularities. Language models, for instance, learn to predict which word is most likely to come next, given the preceding text. Image models, by contrast, learn to analyze and reconstruct visual patterns.
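A real language model uses a neural network that considers long contexts. The basic statistical idea of "which word is likely to come next" can still be sketched with simple word-pair (bigram) counts. The toy below is a deliberate simplification and does not show how foundation models are actually built. The corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Turn the raw follow-up counts for a word into probabilities."""
    counts = follows[word.lower()]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

corpus = "the demand rises in summer . the demand falls in winter . the price rises"
model = train_bigrams(corpus)
probs = next_word_probabilities(model, "the")  # demand: 2/3, price: 1/3
print(max(probs, key=probs.get))               # demand
```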

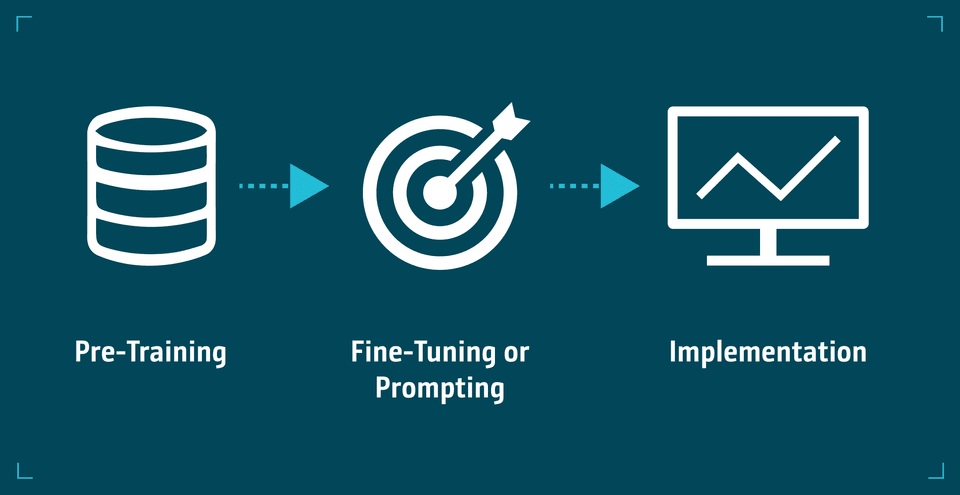

The development process typically takes place in three phases:

- Pre-training: The model is trained on broad, general data to learn fundamental patterns and relationships. This often involves self-supervised learning, a method in which the model learns directly from the data without manual labeling, for example by predicting the next word in a text.

- Fine-tuning or prompting: The pre-trained model is adapted to a specific task. Fine-tuning continues training on supplementary domain-specific data. Prompting guides the model with instructions or example inputs and leaves the model itself unchanged.

- Deployment: The adapted model is put into operation. It receives new input data and generates predictions based on the patterns learned during pre-training and fine-tuning.
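The phases above can be sketched with a toy next-word counter. This is a hypothetical illustration, not a real training pipeline. Pre-training is self-supervised because each word's "label" is simply the word that follows it. Fine-tuning adds weighted counts from a small domain corpus. Deployment queries the adapted model. Prompting, which would guide the model without changing it, is not shown. The corpora and function names are invented.

```python
from collections import Counter, defaultdict

def make_pairs(text):
    """Self-supervised step: the 'label' for each word is simply the word
    that follows it, so no manual annotation is needed."""
    words = text.lower().split()
    return list(zip(words, words[1:]))

def train(model, text, weight=1.0):
    """Add (weighted) next-word counts to the model.
    Used for both pre-training and fine-tuning."""
    for current, nxt in make_pairs(text):
        model[current][nxt] += weight
    return model

def predict(model, word):
    """Deployment: return the most likely next word, or None if the word is unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return max(counts, key=counts.get)

# Phase 1: pre-training on broad, general text (hypothetical corpus)
model = train(defaultdict(Counter), "the order is placed . the order is shipped . the order is placed")
print(predict(model, "is"))  # placed

# Phase 2: fine-tuning on a small domain corpus, weighted more strongly
model = train(model, "the order is delayed", weight=3.0)

# Phase 3: deployment on new input
print(predict(model, "is"))  # delayed
```

The same `train` function serves both pre-training and fine-tuning. This mirrors the fact that fine-tuning usually continues the original training procedure on new data rather than starting over.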

Application at INFORM

INFORM uses foundation models to seamlessly integrate AI-powered forecasting into its software solutions and significantly enhance their performance. One example is demand planning in supply chain management. Unlike traditional time series methods, which rely on rigid formulas and model each data sequence in isolation, INFORM leverages a global foundation model trained on millions of real sales time series. This model recognizes typical demand patterns across different data sources and can contextually transfer them to new products. This makes it possible to include context-dependent factors such as product type, saturation effects, or marketing activities. The result is more differentiated and robust forecasts, even for items with only limited historical data or for newly emerging demand patterns.
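INFORM's model is not described in technical detail here. The following is only a deliberately simple, hypothetical sketch of the general idea behind a global model. It learns one shared, scale-free demand shape from many past series and transfers it to a new item that has only a single observed period. The function names, the launch-curve data, and the averaging approach are assumptions chosen for illustration. A real foundation model learns far richer patterns and context factors.

```python
def learn_global_profile(series_list):
    """Pool many series (assumed to have equal length): normalize each one by
    its own mean, then average position by position to get a shared shape."""
    length = len(series_list[0])
    profile = [0.0] * length
    for series in series_list:
        mean = sum(series) / len(series)
        for t, value in enumerate(series):
            profile[t] += value / mean
    return [p / len(series_list) for p in profile]

def forecast_new_item(profile, history, horizon):
    """Transfer the shared shape to a new item with short history: estimate its
    demand level from the observed periods, then follow the profile."""
    observed = len(history)
    # Level = observed demand divided by what the profile expects for those periods
    level = sum(history) / sum(profile[:observed])
    return [level * profile[t] for t in range(observed, observed + horizon)]

# Hypothetical sales of two established products over their first four weeks
past_series = [[10, 20, 30, 40], [30, 30, 60, 80]]
profile = learn_global_profile(past_series)

# A new product with only one week of history
forecast = forecast_new_item(profile, [4], horizon=3)
print([round(x, 1) for x in forecast])  # [5.6, 9.6, 12.8]
```

Because the shape is learned across many series, the new item still receives a differentiated forecast, even though its own history would be far too short for a per-series model.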

Advantages of Foundation Models

- Versatility: Foundation models can be adapted for a wide variety of tasks, such as text generation, image recognition, translation, or analysis. Once trained, a model can be applied to many different scenarios with minimal additional effort.

- Efficient fine-tuning: Instead of developing a new model for each task, it is often sufficient to fine-tune an existing base model. This saves time, reduces costs, and enables faster implementation of customized applications.

- Scalability: Thanks to their universal structure, foundation models can be easily transferred to new data and application areas. This makes it easier for companies to automate and further develop different processes with a single model.

- High quality of results: Because they are trained on very large and diverse datasets, foundation models achieve remarkable expressiveness and accuracy, especially in language processing or multimodal tasks.

- Drivers of innovation: Foundation models are considered a key building block of many recent advances in AI. They accelerate research and development, enable new product ideas, and are transforming the way AI is used in practice.
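Why efficient fine-tuning saves effort can be shown with a one-parameter toy model. The model fits y = w · x by gradient descent and gets the same small budget of update steps twice: once starting from zero ("from scratch") and once starting from a hypothetical pre-trained value. The data, the pre-trained value 1.9, and the learning rate are invented. Real fine-tuning adjusts millions or billions of parameters, but the effect of a good starting point is the same in principle.

```python
def train_steps(w, data, steps, lr=0.01):
    """Approximate y by w * x using plain gradient descent on the mean squared error."""
    for _ in range(steps):
        # Derivative of mean((w*x - y)^2) with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def mse(w, data):
    """Mean squared error of the model y = w * x on the data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

# Hypothetical small domain dataset whose true slope is 2.0
domain_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
# Hypothetical "pre-trained" parameter learned on broader, related data
pretrained_w = 1.9

from_scratch = train_steps(0.0, domain_data, steps=3)
fine_tuned = train_steps(pretrained_w, domain_data, steps=3)
print("from scratch:", mse(from_scratch, domain_data))
print("fine-tuned:  ", mse(fine_tuned, domain_data))
```

With the same three steps, the fine-tuned parameter ends up much closer to the true slope. This is the reason adapting an existing base model is usually cheaper than training a new one.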

Challenges in Using Foundation Models

- High infrastructure requirements: Developing a foundation model from scratch requires enormous computing resources and investments. Training can take weeks or even months.

- High energy consumption: Large models in particular require massive computing power, which results in significant electricity consumption. The carbon footprint of such training runs is therefore an important sustainability factor that is increasingly coming into focus.

- Dependence on large datasets: Foundation models need a broad and diverse data basis to be effective. If access to suitable data is limited, the quality of the model suffers significantly.

- Limited contextual understanding: Despite their impressive results, these models lack true understanding of intent or social context. They do not possess world knowledge in a human sense, nor do they have psychological or situational empathy.

- Lack of reliability: The generated outputs may be imprecise, misleading, or even problematic, for example when the model produces inappropriate, toxic, or simply incorrect content.

- Risk of bias: Since foundation models learn from massive datasets, there is a danger that they will replicate existing societal prejudices, stereotypes, or discriminatory language. To counteract this, careful selection and preparation of training data is essential, along with ethically sound model design.

FAQ on Foundation Models

What is the difference between foundation models and LLMs?

Foundation models and large language models (LLMs) are closely related but not identical. LLMs are specifically designed for processing and generating text. Foundation models, however, are a broader concept: they include not only language models but also models that work with other data types such as images, speech, or video. Both share central capabilities: recognizing semantic relationships between words, translating text, or generating context-related responses. LLMs can be considered a specialized subcategory of foundation models that focus exclusively on text.

How large are foundation models?

Foundation models are among the largest AI models ever built. The largest of them have hundreds of billions of parameters, which makes them extremely powerful but also computationally intensive. For certain applications, however, smaller variants are gaining importance: compact models that are trained for a specific purpose or derived from larger models, for example through distillation. These compact models can be operated more efficiently and integrated more easily into existing systems.
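A quick calculation shows why model size matters in practice. GPT-3 has 175 billion parameters. Storing each parameter as a 32-bit float takes 4 bytes, and as a 16-bit float 2 bytes. The sketch below counts only the memory for the weights themselves. Running a model needs additional memory for intermediate results, and training needs several times more for gradients and optimizer state. The 7-billion-parameter model is a hypothetical compact variant used for comparison.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9

# GPT-3 scale: 175 billion parameters
print(weight_memory_gb(175e9, 4))  # 32-bit floats: 700.0
print(weight_memory_gb(175e9, 2))  # 16-bit floats: 350.0
# A hypothetical compact model with 7 billion parameters, 16-bit floats
print(weight_memory_gb(7e9, 2))    # 14.0
```

Hundreds of gigabytes exceed the memory of any single current accelerator, whereas a compact model of this size fits on one GPU. This is a large part of why smaller variants integrate more easily into existing systems.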

How can you determine whether a foundation model is suitable for your use case?

The suitability of a foundation model depends on the intended purpose: is it about text comprehension, image processing, or a combination of several data types? It is also important to determine whether general capabilities are sufficient or whether domain-specific knowledge is required. A good starting point is a small pilot project that tests how reliably the model performs on the task at hand. Evaluation tools or providers such as INFORM can help identify the right solution.
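For a forecasting use case, a pilot can be as simple as the following. Hold back the most recent periods, let the candidate model forecast them, and compare its error with a naive baseline. The sketch below assumes such a setup. The data and the candidate forecast are invented. Mean absolute error (MAE) is only one of several possible metrics, and the "repeat the last value" baseline is just one common choice.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def evaluate_pilot(history, holdout, candidate_forecast):
    """Compare a candidate model's forecast for the holdout period with a
    naive baseline that repeats the last observed value."""
    naive = [history[-1]] * len(holdout)
    return {
        "candidate_mae": mean_absolute_error(holdout, candidate_forecast),
        "naive_mae": mean_absolute_error(holdout, naive),
    }

# Hypothetical pilot data: six known periods, three held back for testing
history = [100, 110, 120, 130, 140, 150]
holdout = [160, 170, 180]
candidate = [158, 171, 177]  # forecast produced by the model under test
result = evaluate_pilot(history, holdout, candidate)
print(result)  # {'candidate_mae': 2.0, 'naive_mae': 20.0}
```

A model is only worth adopting if it clearly beats simple baselines on data it has not seen. A holdout comparison like this makes that check explicit.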

Conclusion

Foundation models are transforming how AI is developed and applied. Instead of isolated single-purpose solutions, they provide the technological basis for versatile, scalable, and adaptive applications. However, their success depends on meaningful integration into existing processes as well as responsible and transparent use. In this way, foundation models contribute to creating intelligent systems that provide tangible relief for professionals and deliver sustainable competitive advantages for businesses.

Would you like to learn how foundation models can create value in your industry? Then connect with our AI experts or explore more application examples on our blog.

About our Expert

Sina Schäfer

Corporate Communications Manager

Sina Schäfer has been working as Corporate Communications Manager in Corporate Marketing at INFORM since 2021. Her focus is on external communication in the areas of inventory & supply chain, production and industrial logistics.